Representing Objects in Video as Space-Time Volumes by Combining Top-Down and Bottom-Up Processes

2020 Winter Conference on Applications of Computer Vision

We show how to combine low-level video segmentation, which often lacks semantic knowledge, with a masking network to get the best of both worlds.

Object tracking and decomposition into parts: Our approach (right) bridges the conceptual gap between low-level video representations, such as Temporal Super-Pixels (left), which tend to fall apart over time and do not model objects directly, and instance segmentation methods, such as Mask R-CNN (center), which lack both a decomposition into parts and temporal consistency. Our unsupervised approach detects salient object parts based on motion and appearance.

Overview and Examples

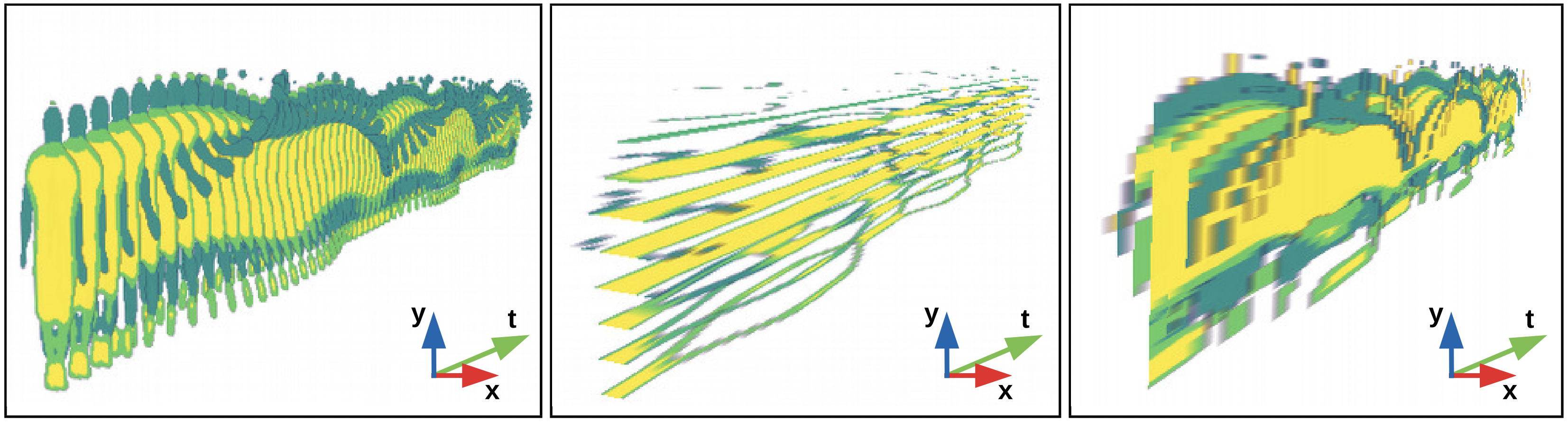

Different object scales: Space-Time Volumes of Interest extracted at increasing spatial and temporal scales from a jumping-jack sequence. Note how finer scales capture more detail at the cost of temporal and spatial coherence, whereas coarser scales model more fundamental patterns.

The extracted representation is inherently volumetric, allowing us to slice it along any of its axes.

This can reveal interesting details such as the tube in the center image formed by the oscillating motion of the legs.
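To make the idea of slicing concrete, here is a minimal sketch that is not taken from the paper. It assumes a binary space-time volume stored as nested lists indexed `volume[t][y][x]`; the toy data and the helper names (`make_toy_volume`, `slice_xy`, `slice_xt`, `slice_yt`) are hypothetical and chosen only for illustration.

```python
# Illustrative only: a toy binary space-time volume, indexed volume[t][y][x].
# The layout and all function names are assumptions, not the authors' code.

def make_toy_volume(num_frames=4, height=3, width=6):
    """A one-pixel-wide vertical bar that moves one pixel right per frame."""
    return [
        [[1 if x == t else 0 for x in range(width)] for _ in range(height)]
        for t in range(num_frames)
    ]

def slice_xy(volume, t):
    """Fix time t: an ordinary frame (rows index y, columns index x)."""
    return [row[:] for row in volume[t]]

def slice_xt(volume, y):
    """Fix image row y: rows index time, columns index x."""
    return [frame[y][:] for frame in volume]

def slice_yt(volume, x):
    """Fix image column x: rows index time, columns index y."""
    return [[row[x] for row in frame] for frame in volume]

volume = make_toy_volume()

# In the x-t slice the moving bar appears as a diagonal streak,
# i.e. motion over time becomes a spatial pattern in the slice.
for row in slice_xt(volume, y=1):
    print(row)
```

With an array library such as NumPy, the same three slices would be written as `volume[t]`, `volume[:, y, :]`, and `volume[:, :, x]`. A periodic motion, such as the legs in a jumping-jack sequence, would show up in such a slice as a repeating pattern rather than a straight streak.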

Viewer/Object-centered representation: The trajectory w.r.t. the observer is shown on the left. The relative motion of object parts w.r.t. the object centroid is shown on the right. This is useful because objects are represented as the sum of their parts, and not just as instance masks.
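The two views can be sketched as follows. This is an illustrative example under stated assumptions, not the authors' implementation: each part is reduced to a per-frame centroid, the object centroid is taken as the unweighted mean of its part centroids, and the function names and toy tracks are hypothetical.

```python
# Illustrative only: viewer-centered vs. object-centered part trajectories.
# Assumption: the object centroid is the unweighted mean of part centroids.

def object_centroid(points):
    """Mean of a list of (x, y) points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)

def to_object_centered(part_tracks):
    """Split part tracks into an object trajectory and relative part motion.

    part_tracks maps a part name to a list of (x, y) centroids, one per
    frame, in image (viewer) coordinates. All tracks have equal length.
    """
    names = list(part_tracks)
    num_frames = len(part_tracks[names[0]])
    viewer = []                            # object centroid per frame
    relative = {name: [] for name in names}  # part offset from centroid
    for t in range(num_frames):
        cx, cy = object_centroid([part_tracks[name][t] for name in names])
        viewer.append((cx, cy))
        for name in names:
            x, y = part_tracks[name][t]
            relative[name].append((x - cx, y - cy))
    return viewer, relative

# Toy data: the object drifts to the right while the leg swings forward.
tracks = {
    "torso": [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0)],
    "leg":   [(0.0, 4.0), (4.0, 4.0), (4.0, 4.0)],
}
viewer, relative = to_object_centered(tracks)
print(viewer)           # trajectory with respect to the observer
print(relative["leg"])  # leg motion with respect to the object centroid
```

Subtracting the object centroid removes the global drift, so what remains in `relative` is only the motion of each part with respect to the object itself.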

Supplement

The DAVIS Parts Dataset contains a variety of densely annotated objects and their parts. We envision this dataset being used for object part tracking and detection.

Cite

@InProceedings{ilic20wacv,

author = {Ilic, Filip and Pinz, Axel},

title = {Representing Objects in Video as Space-Time Volumes by Combining Top-Down and Bottom-Up Processes},

booktitle = {The IEEE Winter Conference on Applications of Computer Vision (WACV)},

month = {March},

year = {2020}

}